As discussed earlier, concerns have been raised about the possibility of "functional unblinding" in the ENHANCE trial of the drug Vytorin (the ezetimibe/simvastatin combination).

As the first part of this exercise, let us take a look at the POWER of the ENHANCE trial. There is something slightly odd here (I think). The question is: can Schering-Plough count?

Power, for the neophytes out there, is the probability of rejecting a statistical null hypothesis that is false. The goal of a power calculation is to allow you to decide, in advance of an experiment:

- How many people (or things) you need to study to answer your question

- How likely it is that your statistical test will be able to detect an effect of a given size

Power depends on:

- The precise question

- The kind of statistical test that will be used

- The size of the sample (bigger = more power)

- The size of the experimental effects you want to detect (bigger effects need a smaller sample)

- The amount of noise in the measurements (measurement error or biological variability)
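To make the list above concrete, here is a minimal sketch of how each factor moves the power of a simple two-group comparison of means. It assumes equal group sizes, a common SD and the normal approximation to the t-test; the function name and the baseline numbers (300 per group, a 0.05 difference, SD 0.20) are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_two_sample(n_per_arm, delta, sd, alpha=0.05, two_sided=True):
    """Approximate power of a two-sample comparison of means.

    Normal approximation to the t-test, equal arm sizes, common SD.
    """
    se = sd * math.sqrt(2 / n_per_arm)  # standard error of the difference in means
    shift = delta / se                  # expected test statistic if the effect is real
    if two_sided:
        z_crit = Z.inv_cdf(1 - alpha / 2)
        # the test rejects in either tail
        return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)
    z_crit = Z.inv_cdf(1 - alpha)
    return Z.cdf(shift - z_crit)


# Hypothetical baseline scenario, for illustration only
base = power_two_sample(300, delta=0.05, sd=0.20)

variants = {
    "bigger sample": power_two_sample(400, delta=0.05, sd=0.20),
    "bigger effect": power_two_sample(300, delta=0.08, sd=0.20),
    "noisier measurements": power_two_sample(300, delta=0.05, sd=0.30),
    "stricter alpha": power_two_sample(300, delta=0.05, sd=0.20, alpha=0.01),
    "one-sided test": power_two_sample(300, delta=0.05, sd=0.20, two_sided=False),
}

print(f"baseline: {base:.3f}")
for label, p in variants.items():
    print(f"{label}: {p:.3f}")
```

A bigger sample or a bigger effect raises the power; more noise or a stricter alpha lowers it; and the kind of test (here one-sided versus two-sided) changes it too.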

![Power statement](http://www.thejabberwock.org/blog/2/pow1.png)

Although it is possible to perform power calculations that take account of covariates or confounders (such as baseline IMT, center and so on), this is difficult, and no estimated covariate parameters are provided. One therefore has to assume that the power calculation was carried out using conventional methods (a t-test). In the end Schering-Plough recruited slightly more patients than their prior power calculation said was the minimum required. However, that is not the issue here. They could also claim that the discrepancy is just a bit of rounding error (see further discussion in the comments). This is again irrelevant: the fact remains that the numbers they give do not add up, and their rather simple calculation cannot be reproduced. The error also slipped past the entire system of safeguards that is supposed to check these little details.

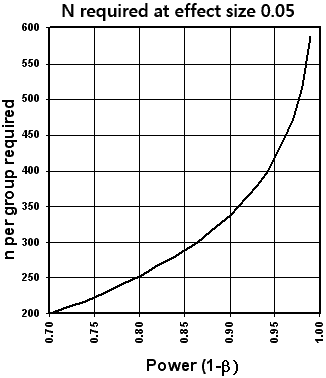

So on to the power. Well, as far as my humble brain can establish, the power for N=650 is in fact 88.9%, not 90%.

The required N for 90% power (two-tailed alpha 0.05, between-group difference of 0.05 mm, SD 0.20 mm) is not N=650.

It is N=674.
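These two figures can be checked with the textbook formula for a two-sample comparison of means, n per arm = 2(z<sub>1-α/2</sub> + z<sub>1-β</sub>)²σ²/δ². This is a minimal sketch assuming equal arms, a common SD and the normal approximation to the t-test; the protocol paper does not say which formula or software was used, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def n_per_group(delta, sd, alpha=0.05, power=0.90):
    """Patients per arm for a two-sided, two-sample comparison of means."""
    z_alpha = Z.inv_cdf(1 - alpha / 2)  # 1.96 for two-sided alpha 0.05
    z_beta = Z.inv_cdf(power)           # 1.28 for 90% power
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


def power_two_sample(n_per_arm, delta, sd, alpha=0.05):
    """Approximate two-sided power for a given number of patients per arm."""
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    shift = delta / (sd * math.sqrt(2 / n_per_arm))
    # both rejection tails; the lower tail contributes almost nothing here
    return Z.cdf(shift - z_alpha) + Z.cdf(-shift - z_alpha)


delta, sd = 0.05, 0.20  # mm, the assumptions stated in the protocol paper
n_required = 2 * n_per_group(delta, sd)
power_650 = power_two_sample(650 // 2, delta, sd)

print(f"total N for 90% power: {n_required}")
print(f"power with N=650: {power_650:.3f}")
```

Under these assumptions the sketch gives a total of 674 and a power of roughly 89% for N=650. A calculation based on the noncentral t distribution rather than the normal approximation gives marginally lower power and can add a patient or two per arm; the sketch does not attempt that refinement.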

A small difference perhaps, but it does tell us something interesting about the process:

- Perhaps I can't count

- Perhaps Schering-Plough or Merck can't count

- Perhaps the authors of the protocol paper didn't read their paper

- Perhaps peer reviewers didn't back-check anything

- Perhaps there is something Merck or Schering-Plough aren't telling

- Perhaps Carrie Smith Cox can only count in units of $28 million

Reference

J. Kastelein, P. Sager, E. de Groot, E. Veltri (2005). Comparison of ezetimibe plus simvastatin versus simvastatin monotherapy on atherosclerosis progression in familial hypercholesterolemia. Design and rationale of the Ezetimibe and Simvastatin in Hypercholesterolemia Enhances Atherosclerosis Regression (ENHANCE) trial. Am Heart J 149(2):234-239.



6 comments:

Perhaps I am missing your point, but according to the press release, the number of patients in the study was 720. So does it matter that they originally projected a lower number?

Also, a friend of mine told me that he calculated the SD from the treatment changes and the p values, and that it was about 0.06 mm. So even if, as they say, there were a lot of outliers or biologically implausible IMT values, they still achieved an SD that was lower than they projected.

Any thoughts?

No, this is not the issue. They did power the study (and did eventually study enough people) to show what they wanted to show (if it were true). Generally studies recruit more than the number specified by the power calculation in order to account for unevaluable patients (dropouts etc.).

This is a different point. Their prior power calculation was in error. They can't do math (or write comprehensible, reproducible science).

To add to your comment, Marilyn (on the separate issue): yes, what you say about the SD is correct. They give N=720, a difference in change of (0.0111 - 0.0058) mm in the wrong direction, and P=0.29. It is possible to do an approximate reverse t-test (assuming that is what they did) and get an SD of around 0.06 mm, which is lower than their original estimate and makes the claim of "lots of outliers" implausible.
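The reverse calculation can be sketched as follows. This assumes two equal arms of 360 (the actual split is not given here), a two-sided P value, and the normal approximation to the t-test, which is very close with roughly 718 degrees of freedom. The function name `implied_sd` is hypothetical.

```python
import math
from statistics import NormalDist


def implied_sd(diff, p_two_sided, n_total):
    """Back out the common SD implied by a mean difference and its P value.

    Assumes a two-sample test with equal arms and a normal approximation.
    """
    z = NormalDist().inv_cdf(1 - p_two_sided / 2)  # |test statistic| matching P
    se = abs(diff) / z                             # standard error of the difference
    n_arm = n_total / 2
    return se / math.sqrt(2 / n_arm)               # SE = SD * sqrt(2 / n_arm)


sd = implied_sd(0.0111 - 0.0058, 0.29, 720)  # mean IMT changes in mm
print(f"implied SD: {sd:.3f} mm")
```

Under these assumptions the implied SD comes out a little under 0.07 mm, of the same order as the figure quoted above and far below the 0.20 mm assumed in the protocol.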

I am simply pointing out a sloppy error. The original 650 was not "a projection" of numbers. It was the required minimum number to achieve a given power given their prior assumptions.

Dr Blumsohn, I have checked your calculations.

You are correct that their numbers are in error. I suspect they might say it is just a bit of rounding error; after all, what are 25 patients between friends?

It is surprising that this was not noticed before (by reviewers or writers), as you suggest.

Just send in the clowns

:) :)

Yes, they could claim rounding error (accounting for the 25 missing patients). In fact, an assumed SD of 0.196 (which would just round to 0.20) would account for the difference. However, they did not give a value of 0.196, and if they had, they would need to explain the origin of that odd estimate.
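The rounding argument can be checked by inverting the sample-size formula: for 325 patients per arm (N=650), what is the largest SD that still gives 90% power? This is a sketch assuming equal arms, two-sided alpha 0.05, a 0.05 mm difference and the normal approximation to the t-test; the function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def z_sum(alpha=0.05, power=0.90):
    """z(1 - alpha/2) + z(power) for a two-sided test."""
    return Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(power)


def max_sd_for_n(n_per_arm, delta, alpha=0.05, power=0.90):
    """Largest common SD for which n_per_arm still achieves the target power."""
    return delta * math.sqrt(n_per_arm / 2) / z_sum(alpha, power)


def n_per_group(delta, sd, alpha=0.05, power=0.90):
    """Patients per arm needed for the target power (rounded up)."""
    return math.ceil(2 * (z_sum(alpha, power) * sd / delta) ** 2)


sd_max = max_sd_for_n(325, 0.05)
print(f"largest SD compatible with N=650: {sd_max:.4f} mm")
for sd in (0.196, 0.20):
    print(f"SD {sd}: total N = {2 * n_per_group(0.05, sd)}")
```

With these assumptions the break-even SD is roughly 0.1966 mm, so an SD of 0.196 mm fits within N=650 while the stated 0.20 mm does not.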

The issue remains: the calculations they presented as a prior estimate of the N required are not internally consistent, and not reproducible. It's a small thing, but it gives some insight into the way in which the process works. It also slipped past all scrutiny. If I were a peer reviewer (or an author) I would have spent five minutes trying to repeat the calculation from the information supplied, but clearly no-one did.

Or maybe my estimate of power (from the assumptions provided) is wrong. I don't think so.
